At least three AI bills have been proposed for the legislative session that began Jan. 8. The Legislature passed a minor bill on AI in 2023.

This session marks the first time that the Legislature will attempt to address AI in a methodical way. Artificial intelligence is an ill-defined, rapidly evolving technology that has entered commerce, education and daily life. Laypersons, including legislators, are struggling to come to grips with the social and economic impacts of AI that are now surfacing.

Sen. Karen Keiser, D-Des Moines, chairperson of the Senate Labor & Commerce Committee, plans to introduce a bill that would require companies using artificial intelligence to inform their employees and customers that AI is being used.

Rep. Travis Couture, R-Allyn, and Sen. Joe Nguyen, D-White Center, sponsored parallel bills in the House and Senate to create a task force to map out how Washington should regulate AI.

Finally, Rep. Clyde Shavers, D-Oak Harbor, is pushing House Bill 1951, which would forbid the use of AI algorithms that lead to discrimination, including in employment, health care, education, criminal justice and more.

Last session, the Legislature passed a bill by Sen. Javier Valdez, D-Seattle, to require disclosure if audio or images in a political advertisement are manipulated. Under that law, manipulating a candidate’s images and audio without that person’s consent gives them cause for legal action.

“AI is a complicated issue that we don’t fully understand. You don’t want to stop innovation. You don’t want to stop commerce. But you don’t want to stop people’s civil rights. … Right now, we’re in that moment where artificial intelligence is everywhere,” Shavers said.

In and out of Olympia, observers warn that legislating too quickly could backfire.

“The biggest pitfall is legislating out of fear and moving too quickly and not recognizing how mismatched are the pace of technology and the pace of legislation. People are in jobs that didn’t exist a year ago,” said Laura Ruderman, CEO of the Washington Technology Alliance.

Right now, the extent of Washington’s emerging AI industry is unclear.

Tech publication GeekWire has counted 132 AI startups in the Pacific Northwest. That does not include tech and non-tech companies that use AI as part of their businesses, or established companies like Microsoft and Amazon that are investing heavily in this technology.

The Washington Technology Industry Association has roughly 1,000 members. “AI is probably in most of our members’ wheelhouses,” said Kelly Fukai, the WTIA’s vice president for government affairs.

What’s clear is that Seattle has become a center for AI development and interest.

“Seattle is in the same breath as San Francisco as an AI hub. … Whenever we put AI on a [chamber] program, it’s packed with people,” said Rachel Smith, CEO of the Seattle Metropolitan Chamber of Commerce.

From science fiction to reality

While state legislatures and the federal government have only recently begun tackling legislation and regulations regarding AI, the concept has been around for millennia.

One of the earliest references to an artificial intelligence dates back to the third century B.C., in a Greek mythological epic poem about Jason and the Argonauts. Talos was a giant automaton (a self-operating machine) made of bronze that walked the shoreline of Crete and threw boulders at ships threatening the island.

British computer pioneer Alan Turing proposed what became known as the Turing test in 1950, contending that a machine could be said to imitate human thinking if, in a written conversation between a computer and a person, an observer couldn't tell which was which. The term “artificial intelligence” was coined for a 1956 research conference at Dartmouth College, which is considered the beginning of the modern concept of AI.

Powered by computers that can process ever-larger volumes of data ever faster, AI has evolved in numerous complex directions. The University of Washington, for example, has academic programs in Automated Planning & Control, Brain-Computer Interfaces & Computational Neuroscience, Computational Biology, Machine Learning, Natural Language Processing, Robotics, and Graphics & Imaging.

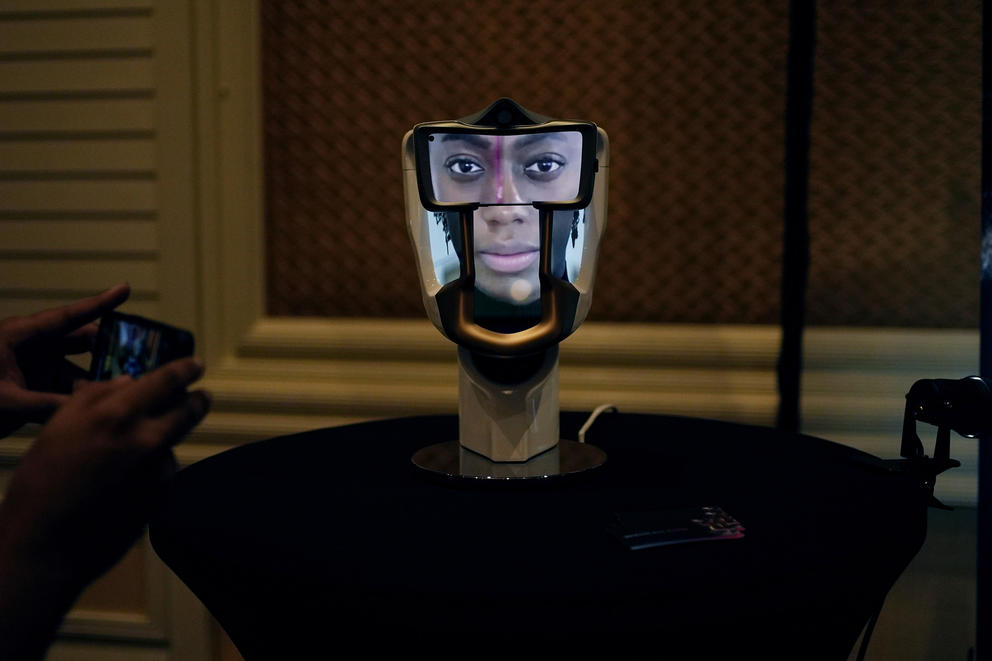

Issues previously in the realm of science fiction have become fact in recent years. These include deepfakes, facial recognition, AI “hallucinations” (confidently stated but false output), AI-written news stories and AI tools blamed for fabricated legal citations.

One well-known AI scandal involved Sports Illustrated, for decades a highly regarded literary sports magazine. After another publication accused it of publishing AI-written stories, Sports Illustrated denied the charge but deleted the stories in question.

For decades, humankind has wrestled with whether AI is a force for good or bad. That question continues today.

A January 2023 Monmouth University poll found that 41% of respondents thought AI does more harm than good, 46% thought it does equal amounts of good and harm, and 9% thought it does more good than harm. The remaining 4% were undecided.

At a Sept. 26 briefing to the Senate’s Labor & Commerce Committee, Stephanie Beers, assistant counsel for Microsoft, said the company had conducted an internal poll showing that 70% of Microsoft’s employees wanted to use AI to help with their workloads, while 50% were concerned about losing their jobs to AI.

“This is a needle that we all need to thread,” Beers said.

Defining AI, by law

A basic piece of any bill in Olympia is the definition of the legislation’s subject, and that gets tricky with “artificial intelligence.” There is no universal definition of the term. What’s the difference between a computer program that crunches data super-fast to spit out an answer and a computer program that actually thinks?

“It depends on who you ask. It’s all over the place, or depends on what people try to build. … It’s not clear,” said Noah Smith, a University of Washington computer scientist and professor who works at the intersection of natural language processing, machine learning and computational social science.

That nebulous meaning is a challenge for writing laws.

“No one knows how to put their finger on a correct definition,” Couture said.

The National Conference of State Legislatures has noted that bills supposedly pertaining to AI were introduced in 25 state legislatures in 2023 – but many of them addressed issues that appear to have nothing to do with artificial intelligence.

Keiser noted that individual state legislatures are dealing with AI issues in different ways. “It’s going to be a patchwork. Each state will be different,” Keiser said. The Biden administration has also only just begun to address the issue at the national level.

Even the bills under consideration in Olympia this year don’t agree on the definition of AI.

Couture’s and Nguyen’s companion bills define artificial intelligence as “technologies that enable machines, particularly computer software, to simulate human intelligence.” They also define “generative artificial intelligence” (the type that creates something new, such as a written story or a piece of art) as “technology that can mimic human ability to learn patterns from substantial amounts of data and create content based on the underlying training data, guided by a user or prompt.”

Shavers’ bill defines AI as “a machine-based system that can, for a given set of human-defined objectives, make predictions, recommendations, or decisions influencing a real or virtual environment.”

Couture’s and Nguyen’s bills acknowledge that legislators don’t have a good grasp of the scope and intricacies of artificial intelligence. Therefore, both bills call on a wide range of experts to determine what is known, what is needed and how all of that should be tackled.

“Often, we don’t know enough on what we don’t know,” Christiana Colclough, a Denmark-based AI consultant, told the Senate’s Labor & Commerce Committee on Sept. 26 via remote testimony.

UW’s Noah Smith said a wide cross-section of interests is needed to draw up legislation and regulations. He warned against allowing major high-tech corporations such as Microsoft, Google and Amazon to dominate such an effort, arguing that independent AI experts, such as those at universities, would be needed to counterbalance the giants’ corporate influence. Microsoft declined to provide someone to be interviewed for this story.

Couture’s and Nguyen’s bills call for the creation of a 38-person study task force to begin meeting this year to come up with recommendations on how the Legislature and state government should address AI issues. Its preliminary recommendations would be due to the governor’s office and to the Legislature by Dec. 1, 2025, with a final report due by June 1, 2027.

The proposed members would include legislators, state officials, business interests, tribes, advocacy organizations focused on discrimination issues, consumer and civil liberties interests, teachers, law enforcement and universities.

But because the technology is evolving so quickly, strict legal definitions could become problematic, said the Washington Technology Alliance’s Ruderman.

“How long will today’s definition last? You have to be careful in setting definitions into law,” Ruderman said.

Meanwhile, Keiser believes addressing transparency is a good first step toward regulating AI. She plans to introduce a bill requiring companies to inform their employees and consumers when AI is interacting with them.

Cherika Carter, secretary-treasurer of the Washington State Labor Council, AFL-CIO, agreed. “Workers should know when AI is used in the workforce so they are aware of who and what they are interacting with,” Carter said.

Shavers’ bill would forbid the use of AI algorithms that lead to discrimination by gender, race, ethnicity or other demographic factors. He sees it as a toehold in AI ethics, another field in which there is no universal code of conduct.

“How do we find what is ethical in the use of artificial intelligence?” Shavers said.

Correction: Kelly Fukai's name was incorrectly spelled in an earlier version of this story. This story has been corrected.